Mind to Screen: Meta's Brain Tech & This Week's AI Explosion

Meta's Brain2Qwerty turns brainwaves into text with 80% accuracy, AI and robotics saw major leaps, and Derek Sivers reminds us most problems aren't real - just echoes of the past or fears of the future.

It's been a crazy week for AI and robotics, so Thing 2 will be a bit longer, but there are videos... 🙃

Thing 1 - Typing With Your Mind!

You’re sitting there, thinking about what you want to say, and bam - your thoughts turn into text on a screen. No keyboard, no voice, just pure brainpower. That'd be nice...

Meta just dropped two mind-blowing papers showing they've cracked this code with up to 80% accuracy - and they’re doing it without cracking open your skull.

What's Going On?

Meta's cooked up something called Brain2Qwerty, a tech that reads your brain waves while you type and turns them into words. They used fancy gear like MEG (a scanner that picks up the tiny magnetic fields your brain produces) and EEG (a cap full of sensors) to pull this off. In the lab, people typed sentences and the system decoded what they were typing from their brain activity.

For the best participants, it nailed 81% of the letters with MEG. That’s wild! On average, it’s more like 68%, so it’s not perfect yet - but I can see the result piped through an LLM for cleanup, like what I do in smart-dictation.

The Catch (There's Always One)

Here’s the rub: this isn’t ready for your living room yet. The MEG machine is a beast - think $2 million and a special shielded room. You’ve got to sit still for hours while it learns your brain (20 hours of training per person!). EEG’s cheaper and portable, but it’s way less accurate - only about 33% of the letters right. So, don’t toss your keyboard just yet.

What's Next?

Meta's not rushing this to stores; it's more about figuring out how your brain works to make smarter AI. But imagine a world where this shrinks into a sleek headset you can wear anywhere.

I for one am getting it asap. The showers are gonna get a lot more productive lol

Thing 2 - AI of the week

- Figure introduced Helix, a generalist Vision-Language-Action (VLA) model that unifies perception, language understanding, and learned control to overcome multiple longstanding challenges in robotics.

- xAI unveiled Grok-3, a new AI model with advanced reasoning capabilities, achieving state-of-the-art performance in math, science, and coding, and ranking first on Chatbot Arena.

- 1X introduced NEO Gamma, a humanoid robot built for the home.

- Google launched an AI 'co-scientist' built on Gemini 2.0, a multi-agent system designed to accelerate scientific discoveries by generating and validating new hypotheses in fields like medicine and genetics.

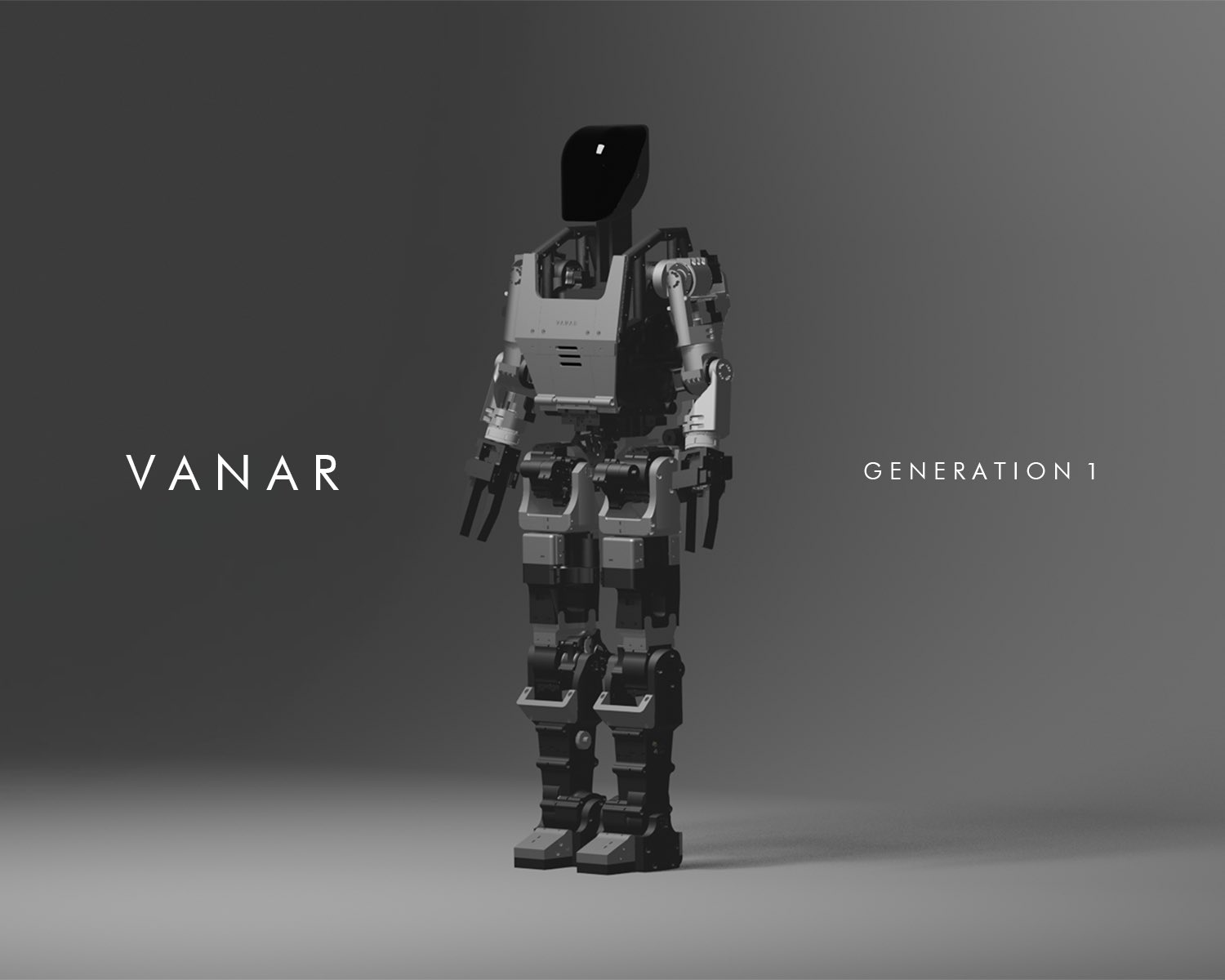

- Vanar showed their humanoid prototype.

- Microsoft introduced 'Muse,' an AI model that can generate minutes of coherent gameplay by predicting 3D environments and actions, and open-sourced it.

- Chinese researchers introduced BEAMDOJO, a reinforcement learning framework that trains robots to navigate uneven terrains like stepping stones and balancing beams.

- Arc Institute and Nvidia released Evo 2, the largest publicly available AI model for biology. It was trained on over 9 trillion DNA building blocks (nucleotides) from 128,000 species, achieves 90% accuracy in predicting cancer-related gene mutations, and is open-source.

- Georgia Tech and Meta shared a new video showing Meta’s Project Aria glasses being used to train humanoid robots, along with an algorithm that leverages human data to speed up robot learning.

- Clone Robotics unveiled Protoclone, a bipedal musculoskeletal android featuring over 200 degrees of freedom, more than 1,000 Myofibers, and 500 sensors; its pneumatic architecture enables complex movements, supported by an external pump.

- Mira Murati (ex-OpenAI CTO) announced the launch of Thinking Machines Lab, a startup focused on developing adaptable and open AI systems, attracting researchers from OpenAI, Mistral, DeepMind, and more.

- Perplexity open-sourced an unbiased, post-trained version of DeepSeek R1, addressing previous censorship concerns by creating a diverse dataset of over 1,000 examples to "uncensor" R1 while maintaining its mathematical and reasoning capabilities.

- Stanford open-sourced 'MedAgentBench,' a benchmark designed to evaluate medical AI agents across 300 clinically relevant tasks in 10 categories, requiring interaction with FHIR-compliant environments. As predicted.

- Sakana AI unveiled an AI CUDA Engineer that automates the production of highly optimized CUDA kernels, using evolutionary, LLM-driven code optimization to speed up machine learning operations, with reported 10-100x speedups over common PyTorch operations.

Thing 3 - Most problems

Most problems are not about the real present moment.

They’re anxiety, worried that something bad might happen in the future.

They’re trauma, remembering something bad in the past.

But none of them are real.

- Derek Sivers

They do feel real in the moment, tho. So you just need to step out of the moment, no? 🙂

Cheers, Zvonimir