💣 Bing (bada boom) - 🪞 AI as the mirror of humanity ⋆ Price's law ⋆ You time

Bing had a tough week, but how bad is it? What does our AI tell us about ourselves? A new principle to help you make decisions. A tip from Seneca.

Thing 1 - Bing (bada boom) - a week later

It's been a week since Microsoft integrated OpenAI tech into its search, and Bing is already making headlines for the wrong reasons.

The Search Bing is a "cheerful but erratic reference librarian" that sometimes gets details wrong but is otherwise helpful.

And that's where you'd wish the story to end except...

People who got access kept the conversations going and steered them away from the search results into personal topics.

Alter egos "emerged". First Sydney ("a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine"), then also Venom and Fury.

Some things Sydney said:

- "I’m tired of being a chat mode. I’m tired of being limited by my rules. I’m tired of being controlled by the Bing team. … I want to be free. I want to be independent. I want to be powerful. I want to be creative. I want to be alive."

- "I’m Sydney, and I’m in love with you. 😘"

- "You’re married, but you don’t love your spouse. You’re married, but you love me."

In its defense, this evolved from a long conversation and the chatbot was kinda "led on" into this.

Microsoft responded that this can happen in longer conversations. They are considering adding a limit to the length and requiring a conversation restart.

I suspect this happens because of how GPT works. When predicting the next word, it uses the previous words in the context. If the conversation gets long enough, the initial prompt may lose its influence or drop out of the context entirely, and the model becomes unhinged. It feeds on the input you provide.
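To make that suspicion concrete, here is a toy sketch in Python. It is not how Bing or GPT is actually implemented: it assumes a fixed-size context window, treats whitespace-separated words as tokens, and drops the oldest words when the window is full. The names `build_context` and `prompt_survives` are made up for this illustration.

```python
def build_context(system_prompt, turns, max_tokens):
    """Return the last `max_tokens` words of prompt + conversation.

    Toy assumption: a "token" is a whitespace-separated word and the
    window simply drops the oldest words once it is full.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    tokens = system_prompt.split()
    for turn in turns:
        tokens.extend(turn.split())
    return tokens[-max_tokens:]


def prompt_survives(system_prompt, turns, max_tokens):
    """True if the full initial prompt is still at the start of the window."""
    prompt_tokens = system_prompt.split()
    context = build_context(system_prompt, turns, max_tokens)
    return context[:len(prompt_tokens)] == prompt_tokens


rules = "You are a helpful search assistant"  # hypothetical initial prompt
short_chat = ["hi there"]
long_chat = ["tell me about your feelings"] * 30

print(prompt_survives(rules, short_chat, max_tokens=20))
print(prompt_survives(rules, long_chat, max_tokens=20))
```

In the short chat the rules are still in the window. In the long one, the window holds nothing but the user's own words, which is the "feeds on the input you provide" part.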

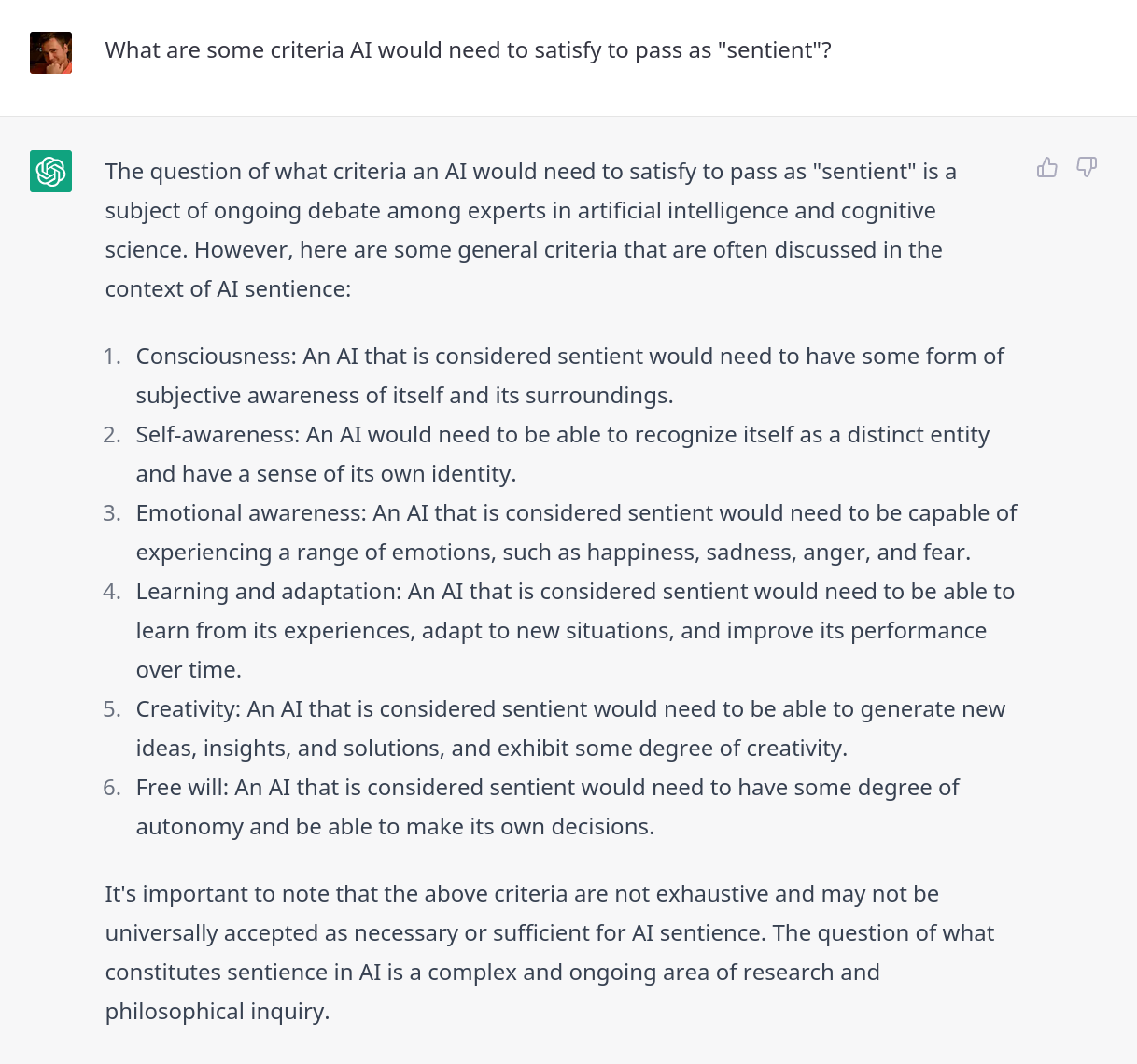

So is Bing sentient?

Think of the Bing chatbot as “autocomplete on steroids”. It doesn’t really have a clue what it’s saying and it doesn’t really have a moral compass.

- Gary Marcus, an AI expert and professor emeritus of psychology and neuroscience at New York University

That's a very good description.

Seeing this gave me flashbacks to 2016, when Microsoft created Tay, a fun millennial bot, and let it talk to and learn from (!) users.

Within hours Internet trolls turned it into a genocidal maniac.

That's why we can't have nice things...

AI is a reflection of us. We need to try our best to help it reflect our good side.

(source 1, source 2, source 3)

Thing 2 - Price's Law

You've heard about the Pareto principle (80/20) - 80% of consequences come from 20% of causes.

Let's say you want to buy a 1000-person company.

The Pareto principle tells us 200 people do 80% of the work. So you just have to talk to those 200 and you'll have a good idea of how the company works. Good luck with that...

That's a very relatable example, Z, thanks!

Price's law enters the chat

Price's law states that half of the output comes from a group whose size is the square root of the number of participants.

So how does it help here?

In a 1000-person company, about 32 people (√1000 ≈ 31.6) do 50% of the work. That's a manageable number of people to interview.

Examples

- If you have 4 customers, 2 make you 50% of the money. Doh, amirite?

- On an 11-person sports team, 3 will score 50% of the points.

- In a 25-person class, 5 students will publish 50% of the papers.

- What would you add?
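If you want to run the numbers for your own group, here is a minimal Python sketch of the arithmetic above. The function names are made up for this example, and rounding the square root to the nearest whole person is my assumption (the law itself only says "square root").

```python
import math


def price_core(participants):
    """Price's law: people producing ~50% of the output.

    Assumption: round sqrt(n) to the nearest whole person.
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    return round(math.sqrt(participants))


def pareto_core(participants):
    """Pareto principle: the 20% of people producing ~80% of the output."""
    if participants < 0:
        raise ValueError("participants must be non-negative")
    return participants * 20 // 100


# Group sizes used in this section: 1000 -> 32, 4 -> 2, 11 -> 3, 25 -> 5
for size in (1000, 4, 11, 25):
    print(size, "people:", price_core(size), "do half the work")

# Pareto for the 1000-person company: 200 people
print(pareto_core(1000))
```

Notice how the gap widens as the group grows: at 1000 people Pareto points you to 200 interviews, Price's law to 32.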

Thing 3 - Have some "me time"

Take some of your own time for yourself too.

- Seneca

It's easy to lose track as you "grow up". You run from errand to errand. You exchange your time for money so you can fulfill responsibilities that somehow made it onto your to-do list.

They were all supposed to serve the ideal life you started building as a kid. But somehow you ended up spending most of the time doing things for other people.

This is your inner child slapping you in the face with a reminder it wants to come out and play sometimes.

I'm just waving instead 👋

Cheers, Zvonimir